Deploying a Scalable Data Science Environment Using Docker

Published in Data Science and Digital Business, 2019

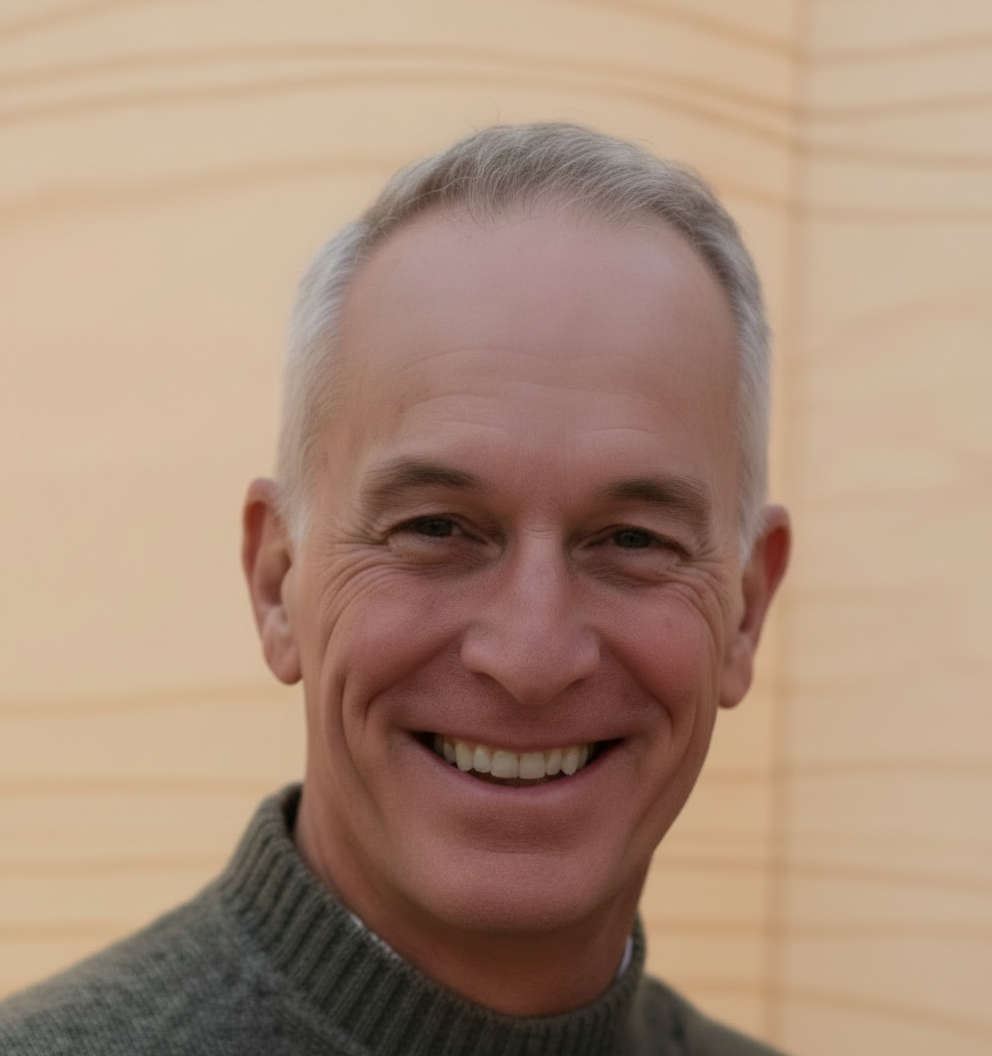

Recommended citation: Martín-Santana S, Pérez-González CJ, Colebrook M, Roda-García JL, González-Yanes P. "Deploying a Scalable Data Science Environment Using Docker". In: García Márquez F., Lev B. (eds) Data Science and Digital Business. Springer, Cham. Print ISBN: 978-3-319-95650-3, Online ISBN: 978-3-319-95651-0 (2019) https://doi.org/10.1007/978-3-319-95651-0_7

Abstract

Within the Data Science stack, the infrastructure layer that supports the distributed computing engine plays a key role in obtaining timely and accurate insights in a digital business. However, the cost of running such Data Science facilities on a commercial cloud infrastructure is not always affordable. To address this, we develop a computing environment based on free software tools running on commodity computers. Specifically, we show how to use Docker to deploy an easily scalable Spark cluster that includes both Jupyter and RStudio, supporting the Python and R programming languages. Moreover, we present a successful case study in which this computing framework was used to analyze statistical results from data collected at meteorological stations in the Canary Islands (Spain).
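To make the "easily scalable" idea concrete, here is a minimal sketch that generates a Docker Compose definition with one Spark master, a configurable number of Spark workers, and Jupyter and RStudio front ends. It is not the configuration used in the paper. The image names (`bitnami/spark`, `jupyter/pyspark-notebook`, `rocker/rstudio`), environment variables, ports, and the `build_compose` helper are all illustrative assumptions. Treat them as placeholders to check against each image's documentation, and note that the paper's setup may differ (for example, by spreading containers across several physical hosts).

```python
import json

# ASSUMPTION: illustrative public images, not the ones used in the paper.
SPARK_IMAGE = "bitnami/spark"
JUPYTER_IMAGE = "jupyter/pyspark-notebook"
RSTUDIO_IMAGE = "rocker/rstudio"
MASTER_URL = "spark://spark-master:7077"  # default Spark standalone master port


def build_compose(num_workers: int) -> dict:
    """Hypothetical helper: return a Compose structure with one Spark master,
    `num_workers` Spark workers, and Jupyter/RStudio front-end services."""
    if num_workers < 1:
        raise ValueError("a Spark cluster needs at least one worker")
    services = {
        "spark-master": {
            "image": SPARK_IMAGE,
            "environment": {"SPARK_MODE": "master"},
            "ports": ["8080:8080", "7077:7077"],  # web UI and master port
        },
        "jupyter": {
            "image": JUPYTER_IMAGE,
            "ports": ["8888:8888"],
            "depends_on": ["spark-master"],
        },
        "rstudio": {
            "image": RSTUDIO_IMAGE,
            "environment": {"PASSWORD": "change-me"},  # placeholder credential
            "ports": ["8787:8787"],
            "depends_on": ["spark-master"],
        },
    }
    # Scaling the cluster means adding worker services that point at the master.
    for i in range(1, num_workers + 1):
        services[f"spark-worker-{i}"] = {
            "image": SPARK_IMAGE,
            "environment": {"SPARK_MODE": "worker", "SPARK_MASTER_URL": MASTER_URL},
            "depends_on": ["spark-master"],
        }
    return {"services": services}


if __name__ == "__main__":
    # JSON is a subset of YAML 1.2, so this output can be saved as a Compose file.
    print(json.dumps(build_compose(num_workers=3), indent=2))
```

Saving the output as `docker-compose.yml` and running `docker compose up -d` should start the whole stack. Growing the cluster then only requires regenerating the file with a larger `num_workers`. An alternative design is to declare a single worker service and use `docker compose up --scale`, which avoids regenerating the file.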